Who is SMASH Virtual?

SMASH Virtual was founded in 2022 and is Chicago’s largest LED virtual production studio. We serve the film, television, and advertising industries, and we pride ourselves on holding our work to the highest standards. We’ve worked with Apple, Sony, Amazon, NBC, and more!

When we don’t have a production in the studio, we use the time to learn new tools and optimize our workflow. In the last year, Marmoset Toolbag has become a staple of our content creation pipeline, but with such a fast renderer, we wondered whether it could create full digital backdrops in real time.

The Rise of Virtual Production

In the last few years, virtual production has become a multi-billion-dollar segment of the film, television, and commercial industries. Initially pioneered by productions like Gravity and The Mandalorian, it now runs on hundreds of LED volume stages worldwide, including our own at SMASH Virtual.

The technique involves attaching a motion tracking system to a camera and sending that data to Unreal Engine. Unreal positions a virtual camera to match the physical camera, renders the view from that virtual camera, and “projects” it onto a 3D model of the LED screen. Using Unreal’s nDisplay system, that view is split across multiple computers and output with frame-locked sync. All of this happens in real time, at 24 frames per second.
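To make the “projection” step more concrete, here is the core geometry with a simple pinhole camera: for a point on the LED wall, find where it lands in the tracked camera’s image, which is where that wall pixel should sample the render. This is a minimal illustration, not how nDisplay is implemented. It assumes a camera that only yaws (no pitch or roll), a y-up coordinate system with +z forward at zero yaw, and the hypothetical names `TrackedCamera` and `wall_point_to_uv`.

```python
import math
from dataclasses import dataclass


@dataclass
class TrackedCamera:
    # Hypothetical tracked pose: position in metres (x right, y up, z forward
    # at zero yaw) plus yaw in degrees. Pitch and roll are ignored for brevity.
    x: float
    y: float
    z: float
    yaw_deg: float
    hfov_deg: float  # horizontal field of view
    aspect: float    # image width / height


def wall_point_to_uv(cam: TrackedCamera, px: float, py: float, pz: float):
    """Return (u, v) in [0, 1] where the camera's render should be sampled for
    the LED wall point (px, py, pz), or None if the point is behind the camera.
    u grows to the right, v grows downward (image convention)."""
    # Move the wall point into camera-relative coordinates.
    dx, dy, dz = px - cam.x, py - cam.y, pz - cam.z
    # Undo the camera's yaw (rotation about the vertical y axis).
    yaw = math.radians(cam.yaw_deg)
    cx = dx * math.cos(yaw) - dz * math.sin(yaw)
    cz = dx * math.sin(yaw) + dz * math.cos(yaw)
    cy = dy
    if cz <= 0:
        return None  # Behind the camera: nothing to sample.
    # Pinhole projection onto an image plane one unit in front of the camera.
    half_w = math.tan(math.radians(cam.hfov_deg) / 2)
    half_h = half_w / cam.aspect
    u = 0.5 + (cx / cz) / (2 * half_w)
    v = 0.5 - (cy / cz) / (2 * half_h)
    return u, v
```

Evaluating this for every wall pixel, every frame, as the tracked pose changes is what keeps the background’s perspective locked to the physical camera.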

It’s a complicated process, but it’s magic when it works. It transforms a simple LED screen into a time machine that can transport a film set to any time and any place. However, the process is computationally expensive, and a lot of time goes into optimizing the 3D scenes.

Unreal Engine is an amazing tool, but it has its limitations, and it is one of the few options for this process in the industry. Because of this, we spent a lot of time exploring alternatives so we could rely less on a single engine. We came across Marmoset Toolbag and decided to build our workflow around this lightweight yet powerful software to provide high-fidelity rendering for our backdrops.

Using Toolbag in our Virtual Production Pipeline

Our first pass at this technique with Toolbag still relies on Unreal to handle the display output, but that is not strictly required: systems like Pixera, Disguise, or Notch could be used instead. Unreal was simply the quickest route to a proof of concept.

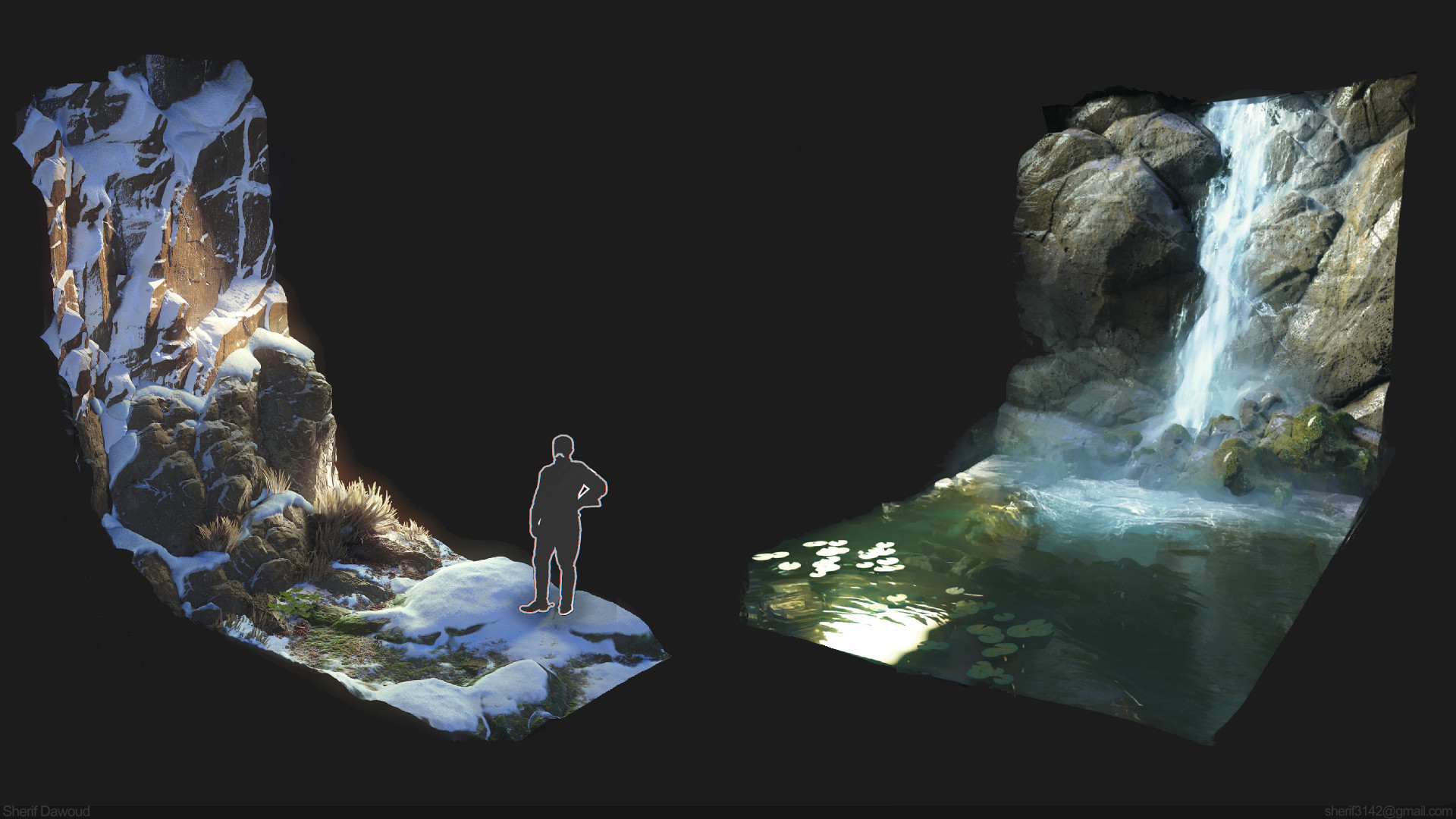

One alternative to a full 3D world is the so-called “2.5D” technique. Usually, this involves going out and shooting a video plate of an actual location. A VFX team then cuts this video into multiple transparent layers. Those layers are positioned in 3D space, allowing for a small amount of parallax as the camera moves. This process is used when camera movement is limited, such as in interviews or product shots. Because the backdrop is real footage, the lighting artifacts and aliasing issues that Unreal often shows are gone. However, changing anything in the scene is impossible, as it’s simply a video clip.
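The small parallax a layered 2.5D plate provides follows from the pinhole model: when the camera slides sideways by t, a layer at depth z shifts in the image by roughly f · t / z pixels, where f is the focal length in pixels. Near layers move more than far ones, which is what sells the depth. A minimal sketch; the layer depths, the 1920-pixel image width, and the 90° field of view in the usage lines are illustrative assumptions, and the function names are hypothetical.

```python
import math


def focal_length_px(image_width_px: float, hfov_deg: float) -> float:
    """Pinhole focal length expressed in pixels for a given horizontal FOV."""
    return (image_width_px / 2) / math.tan(math.radians(hfov_deg) / 2)


def layer_shift_px(camera_offset_m: float, layer_depth_m: float, focal_px: float) -> float:
    """Horizontal image shift (pixels) of a flat layer at `layer_depth_m`
    when the camera translates sideways by `camera_offset_m`."""
    return focal_px * camera_offset_m / layer_depth_m


# Illustrative usage: a 0.5 m camera slide past three hypothetical layers.
f = focal_length_px(1920, 90.0)
for name, depth in {"foreground": 3.0, "midground": 10.0, "background": 50.0}.items():
    print(f"{name}: {layer_shift_px(0.5, depth, f):.1f} px")
```

Because each layer is flat, everything on it shifts by the same amount, so the parallax is only correct for content that actually sits near that layer’s depth.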

Our idea was to stage our 3D worlds in a separate piece of software, feed the camera tracking into it, and render a single high-quality still frame. That frame is then used for 2.5D in another system, in this case Unreal. This gives us the graphical fidelity of a 2.5D workflow with the flexibility of a 3D workflow, and it works great as long as the camera doesn’t move too much!
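Two pieces of geometry sit behind this approach. To fill the frame, a plane at distance d must be 2 · d · tan(FOV/2) wide, assuming the still was rendered with the same horizontal FOV as the tracked camera. And because everything in the still is flattened onto that plane, a point whose true depth is z drifts by roughly t · |1/d - 1/z| radians when the camera slides sideways by t, which is why small camera moves hold up and large ones do not. A minimal sketch under small-angle, centre-of-frame assumptions; the function names and the numbers in the usage lines are hypothetical.

```python
import math


def plane_size_for_still(distance_m: float, hfov_deg: float, aspect: float):
    """Width and height (metres) a plane needs at `distance_m` from the camera
    to exactly fill a still rendered with this horizontal FOV and aspect."""
    width = 2 * distance_m * math.tan(math.radians(hfov_deg) / 2)
    return width, width / aspect


def parallax_error_deg(camera_offset_m: float, plane_distance_m: float, true_depth_m: float) -> float:
    """Approximate angular error (degrees) for a point near the centre of frame
    at `true_depth_m` that was baked onto a plane at `plane_distance_m`, after
    the camera slides sideways by `camera_offset_m` (small-angle approximation)."""
    error_rad = camera_offset_m * abs(1 / plane_distance_m - 1 / true_depth_m)
    return math.degrees(error_rad)


# Illustrative usage with made-up numbers.
w, h = plane_size_for_still(5.0, 40.0, 16 / 9)
print(f"plane: {w:.2f} m x {h:.2f} m")
print(f"error after a 0.3 m move: {parallax_error_deg(0.3, 5.0, 100.0):.2f} deg")
```

Points that really sit at the plane’s distance have zero error; the error grows with both camera travel and how far a point’s true depth is from the plane.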

Our prototype process is as follows:

- Camera tracking data from our OptiTrack system is ingested by a program called Reality Field, which sends it to Marmoset Toolbag via a custom script.

- When the custom script is run, Toolbag positions a camera based on the physical camera position sent from Reality Field.

- Toolbag renders a ray-traced still image from that camera.

- In Unreal, a 2D plane is offset a set distance from a tracked camera and textured with the image rendered in Toolbag. The plane itself is also tracked.

- Tracking is then frozen for the plane, so it stays correctly “zeroed” relative to the camera position but remains static during shooting, which allows some parallax.

- Additional elements, like particle effects, foreground 3D models, and HDRIs, are added in Unreal. From this point on, the process inside Unreal Engine is unchanged.
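The actual message format between Reality Field and Toolbag, and the Toolbag and Unreal calls involved, aren’t described here. The following sketch therefore models only the data flow of the steps above in plain Python: parse a tracked camera pose, keep a plane a fixed distance in front of it, and freeze the plane once the still is rendered. The JSON message layout, the coordinate convention (y up, +z forward at zero yaw, yaw only), and the names `Pose`, `parse_tracking_message`, and `TrackedPlane` are all hypothetical.

```python
import json
import math
from dataclasses import dataclass


@dataclass
class Pose:
    x: float
    y: float
    z: float
    yaw_deg: float


def parse_tracking_message(raw: bytes) -> Pose:
    # Hypothetical wire format: {"x": .., "y": .., "z": .., "yaw": ..}
    # with positions in metres and yaw in degrees.
    data = json.loads(raw)
    return Pose(data["x"], data["y"], data["z"], data["yaw"])


class TrackedPlane:
    """Follows the camera at a fixed offset until frozen, then stays put."""

    def __init__(self, offset_m: float):
        self.offset_m = offset_m
        self.frozen = False
        self.position = (0.0, 0.0, 0.0)
        self.yaw_deg = 0.0

    def update(self, cam: Pose) -> None:
        if self.frozen:
            return  # Frozen: ignore tracking so the moving camera sees parallax.
        yaw = math.radians(cam.yaw_deg)
        # Place the plane `offset_m` ahead along the camera's forward axis.
        self.position = (cam.x + self.offset_m * math.sin(yaw),
                         cam.y,
                         cam.z + self.offset_m * math.cos(yaw))
        self.yaw_deg = cam.yaw_deg  # Face the plane back toward the camera.

    def freeze(self) -> None:
        self.frozen = True


# Illustrative usage: zero the plane, freeze it, then move the camera.
plane = TrackedPlane(offset_m=5.0)
plane.update(parse_tracking_message(b'{"x": 0, "y": 1.5, "z": 0, "yaw": 0}'))
plane.freeze()  # e.g. at the moment the Toolbag still is rendered
plane.update(parse_tracking_message(b'{"x": 0.3, "y": 1.5, "z": 0, "yaw": 5}'))
print(plane.position)  # still (0.0, 1.5, 5.0)
```

Freezing is what produces the parallax: once the plane stops following the camera, moving the physical camera changes its view of the plane the way it would for a real backdrop.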

Why We Chose Toolbag

While a 3D modeling tool like Blender or Maya might seem a more obvious choice than Toolbag for this application, there are several key reasons why we opted for Toolbag:

- The streamlined UI shows only what we need, rather than a sea of endless checkboxes and dropdowns. That is absolutely critical on fast-paced, high-stress film sets.

- Both the raster and ray-traced renderers produce fantastic images much faster than the other tools we tried.

- Having texture baking and painting built-in makes it easy to finesse the look of 3D objects without leaving the software.

- Toolbag’s Python scripting allowed us to get up and running quickly.

- Industry-leading handling of USD, which allows near-perfect interoperability with Unreal.

Our Future Objectives

In the future, we would like to group objects in the scene into multiple layers (foreground, midground, and background) that could be placed on different planes, furthering the illusion of parallax. Of course, setting up Toolbag to do all of this with one click would make for an amazing workflow.
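One way the proposed grouping could work is to bucket objects by their distance from the camera and place each layer’s plane near the depth of its objects, so that content on each plane gets parallax close to correct. This is only a sketch of the idea, not existing Toolbag functionality: the 5 m and 20 m thresholds, the median placement, and the names `SceneObject`, `split_into_layers`, and `plane_distances` are all assumptions.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class SceneObject:
    name: str
    distance_m: float  # distance from the tracked camera


def split_into_layers(objects, near_m: float = 5.0, far_m: float = 20.0):
    """Bucket objects into foreground / midground / background by distance.
    The thresholds are arbitrary placeholders."""
    layers = {"foreground": [], "midground": [], "background": []}
    for obj in objects:
        if obj.distance_m < near_m:
            layers["foreground"].append(obj)
        elif obj.distance_m < far_m:
            layers["midground"].append(obj)
        else:
            layers["background"].append(obj)
    return layers


def plane_distances(layers):
    """Put each non-empty layer's plane at the median depth of its objects."""
    return {name: median(o.distance_m for o in objs)
            for name, objs in layers.items() if objs}


# Illustrative usage with made-up objects.
scene = [SceneObject("rock", 3.0), SceneObject("tree", 8.0),
         SceneObject("ruin", 12.0), SceneObject("mountain", 200.0)]
print(plane_distances(split_into_layers(scene)))
```

Each layer would then be rendered as its own transparent still and mapped onto a plane at its distance, in the same way the single plane is handled today.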

The ultimate goal is to cut out Unreal Engine entirely and create a viable alternative for in-camera VFX (ICVFX)! That’s no easy feat, but as we’ve seen, Toolbag is already very close to making it possible.

We want to thank SMASH Virtual for sharing their experience with Toolbag. You can find more of SMASH Virtual’s work on their website. Explore the complete feature set of Toolbag using the 30-day trial.

If you’re interested in collaborating on a tutorial or breakdown article, please send us your pitch and a link to your artwork to submissions@marmoset.co.